MCP (Model Context Protocol) is a new open protocol designed to standardize how applications provide context to Large Language Models (LLMs).

Think of MCP as a USB-C port for AI agents: it provides a uniform method for connecting AI systems to various tools and data sources.

This post breaks down MCP: what value it offers, how its architecture works, and how it differs from traditional APIs.

What is MCP?

The Model Context Protocol (MCP) is a standardized protocol that enables AI agents to connect with various external tools and data sources. Imagine it as a USB-C port – but for AI applications.

Just as USB-C simplifies how you connect different devices to your computer, MCP simplifies how AI models interact with your data, tools, and services.

Why use MCP instead of traditional APIs?

Traditionally, connecting an AI system to external tools involves integrating multiple APIs. Each API integration means separate code, documentation, authentication methods, error handling, and maintenance.

Why traditional APIs are like having separate keys for every door

Metaphorically speaking, APIs are like individual doors: each door has its own key and its own rules.

Who's behind MCP?

MCP originated as a project by Anthropic to make it easier for AI models, such as Claude, to interact with tools and data sources.

But it’s not just an Anthropic thing anymore. MCP is open, and more companies and developers are joining the initiative.

It’s starting to look a lot like a new standard for AI-tool interactions.

MCP vs. API: Quick comparison

Key differences between MCP and traditional APIs

- Single protocol: MCP acts as a standardized “connector,” so integrating MCP once gives potential access to multiple tools and services, not just one

- Dynamic discovery: MCP allows AI models to dynamically discover and interact with available tools without hard-coded knowledge of each integration

- Two-way communication: MCP supports persistent, real-time two-way communication, similar to WebSockets. The AI model can both retrieve information and trigger actions dynamically:

  - Pull data: the LLM queries servers for context, e.g., checking your calendar

  - Trigger actions: the LLM instructs servers to take actions, e.g., rescheduling meetings or sending emails
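To make dynamic discovery and the pull/trigger split concrete, here is a minimal, self-contained Python sketch. It does not use any MCP SDK. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are method names from the protocol, but everything else here is an assumption made for illustration: the in-memory `CALENDAR`, the two tools, the `handle` and `rpc` helpers, and the simplified result payloads. The real protocol also defines an initialization handshake and richer message fields, which are omitted.

```python
import json
from typing import Any, Callable, Dict, List, Optional, Tuple

# Hypothetical in-memory data source behind the toy server.
CALENDAR: Dict[str, List[str]] = {"2025-01-10": ["Design review at 10:00"]}


def get_events(date: str) -> List[str]:
    """Pull data: return the events stored for a date."""
    return list(CALENDAR.get(date, []))


def add_event(date: str, title: str) -> str:
    """Trigger an action: add an event to the calendar."""
    CALENDAR.setdefault(date, []).append(title)
    return f"added '{title}' on {date}"


# Tool registry: name -> (description, handler). Illustrative only.
TOOLS: Dict[str, Tuple[str, Callable[..., Any]]] = {
    "get_events": ("List calendar events for a date", get_events),
    "add_event": ("Add a calendar event", add_event),
}


def _error(req_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Toy server: dispatch one JSON-RPC 2.0 request, return the response."""
    req = json.loads(raw)
    req_id, method = req.get("id"), req["method"]
    params = req.get("params", {})
    if method == "tools/list":
        # Discovery: the client learns what exists at runtime.
        result = {"tools": [{"name": n, "description": d}
                            for n, (d, _) in TOOLS.items()]}
    elif method == "tools/call":
        if params.get("name") not in TOOLS:
            return _error(req_id, -32602, "Unknown tool")
        _, fn = TOOLS[params["name"]]
        # Simplified payload; real MCP results are structured differently.
        result = {"output": fn(**params.get("arguments", {}))}
    else:
        return _error(req_id, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


def rpc(method: str, params: Optional[dict] = None, id_: int = 1) -> dict:
    """Toy client: build a request, 'send' it in-process, parse the reply."""
    req = {"jsonrpc": "2.0", "id": id_, "method": method, "params": params or {}}
    return json.loads(handle(json.dumps(req)))


if __name__ == "__main__":
    # 1. Discover tools without hard-coded knowledge of the server.
    print([t["name"] for t in rpc("tools/list")["result"]["tools"]])
    # 2. Pull data.
    print(rpc("tools/call", {"name": "get_events",
                             "arguments": {"date": "2025-01-10"}}))
    # 3. Trigger an action.
    print(rpc("tools/call", {"name": "add_event",
                             "arguments": {"date": "2025-01-10",
                                           "title": "Team sync"}}))
```

The client code never names a tool until it has asked the server what is available, which is the practical difference from wiring each API in by hand.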

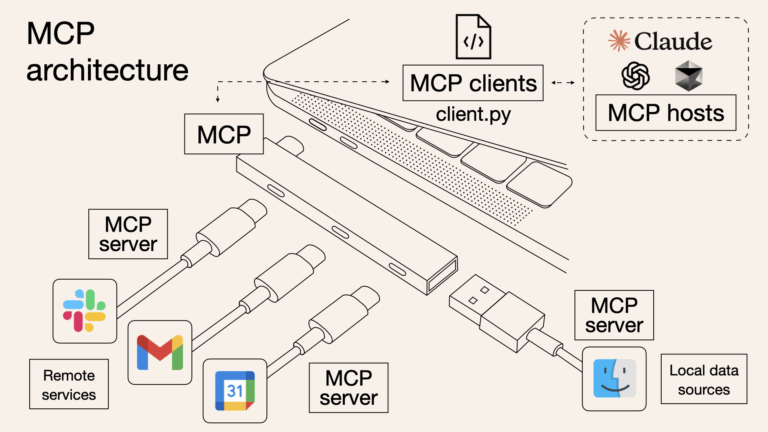

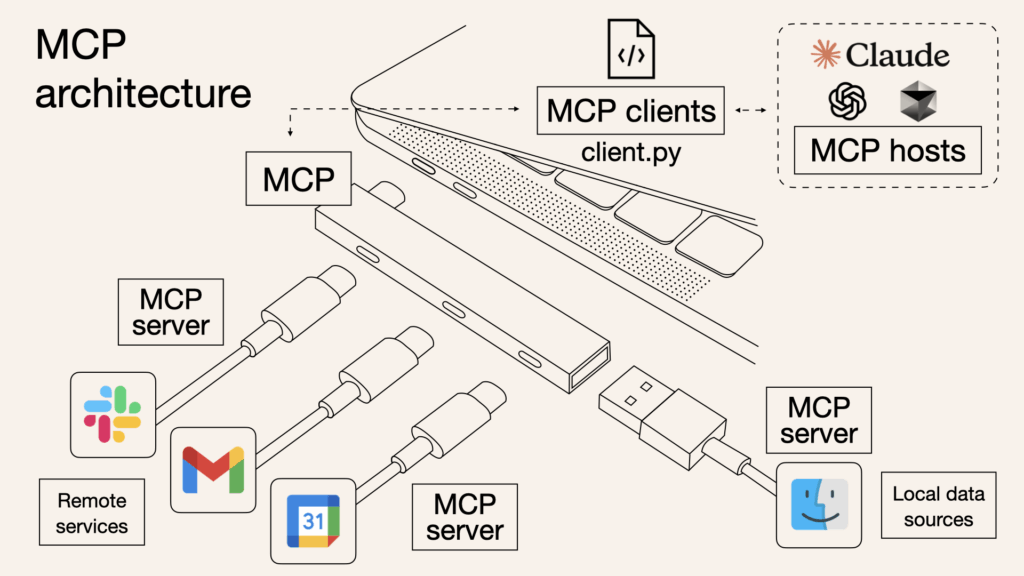

How MCP works: The architecture

- MCP Hosts: These are applications (like Claude Desktop or AI-driven IDEs) that need access to external data or tools

- MCP Clients: They maintain dedicated, one-to-one connections with MCP servers

- MCP Servers: Lightweight servers exposing specific functionalities via MCP, connecting to local or remote data sources

- Local Data Sources: Files, databases, or services securely accessed by MCP servers

- Remote Services: External internet-based APIs or services accessed by MCP servers
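The roles above can be sketched as three small Python classes. This is a toy model of the topology only, not the MCP SDK: the `Host`, `Client`, and `Server` classes, their methods, and the two example servers are all hypothetical names chosen for illustration, and the "connection" is just an in-process object reference rather than a real transport.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Server:
    """Toy MCP server: exposes named capabilities backed by a data source."""
    name: str
    tools: Dict[str, Callable[..., object]]


@dataclass
class Client:
    """Toy MCP client: a dedicated, one-to-one connection to one server."""
    server: Server

    def list_tools(self) -> List[str]:
        return sorted(self.server.tools)

    def call(self, tool: str, **kwargs) -> object:
        return self.server.tools[tool](**kwargs)


@dataclass
class Host:
    """Toy MCP host: the application; it owns one client per server."""
    clients: Dict[str, Client] = field(default_factory=dict)

    def connect(self, server: Server) -> None:
        # Each new server gets its own client; existing ones are untouched.
        self.clients[server.name] = Client(server)

    def catalog(self) -> Dict[str, List[str]]:
        """Everything the model could use, grouped by server."""
        return {name: c.list_tools() for name, c in self.clients.items()}

    def call(self, server: str, tool: str, **kwargs) -> object:
        return self.clients[server].call(tool, **kwargs)


# Hypothetical local data source and a stand-in for a remote service.
NOTES = {"todo.txt": "ship the draft"}
files = Server("files", {"read": lambda path: NOTES[path]})
weather = Server(
    "weather",
    {"forecast": lambda city: f"forecast for {city}: not available in this sketch"},
)

host = Host()
host.connect(files)
host.connect(weather)

if __name__ == "__main__":
    print(host.catalog())
    print(host.call("files", "read", path="todo.txt"))
```

Adding a capability in this model means calling `connect` with another server; the host's own code does not change.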

Visualizing MCP as a bridge makes it clear

MCP doesn’t handle heavy logic itself; it simply coordinates the flow of data and instructions between AI models and tools.

Benefits of implementing MCP

- Simplified development: Write once, integrate multiple times without rewriting custom code for every integration

- Flexibility: Switch AI models or tools without complex reconfiguration

- Real-time responsiveness: MCP connections remain active, enabling real-time context updates and interactions

- Security and compliance: Access controls and security practices can be applied consistently through one protocol instead of separately for each integration

- Scalability: Add new capabilities as your AI ecosystem grows by connecting another MCP server

When are traditional APIs better?

If your use case demands precise, predictable interactions with strict limits, traditional APIs could be preferable. MCP provides broad, dynamic capabilities ideal for scenarios requiring flexibility and context awareness, but may be less suited for highly controlled, deterministic applications.

Stick with granular APIs when:

- Fine-grained control and highly specific, restricted functionalities are needed

- You prefer tight coupling for performance optimization

- You want maximum predictability and minimal autonomy for the model

Conclusion

MCP provides a unified and standardized way to integrate AI agents and models with external data and tools. It’s not just another API; it’s a powerful connectivity framework enabling intelligent, dynamic, and context-rich AI applications.